Thursday, January 31, 2019

Notes on Kuzmin's "Full Photogrammetry Guide for 3D Artists"

Full Photogrammetry Guide for 3D Artists

taken from:

https://80.lv/articles/full-photogrammetry-guide-for-3d-artists/

This guide is very helpful and thorough. Kuzmin uses RealityCapture, plus some external software such as ZBrush.

Wednesday, January 30, 2019

Photogrammetry vs Scanning

I spoke to Jonathan Mercado, an alum of the Drexel DIGM Master's program, who had also asked the same question: laser scanners or photogrammetry?

Benefits of photogrammetry:

- much cheaper than 3D scanning hardware

- much faster prep time

- non-destructive

- produces a crisper albedo

The benefit of 3D scanning is that you can see the model in realtime, whereas in photogrammetry you have to wait for the reconstruction process.

He sent me some of his documentation as well.

So that settles it! This is not to say scanning is completely obsolete-- I do think there are specific use cases where scanners are more applicable. But I have also confirmed my suspicions that photogrammetry is the way to go, particularly for the creative fields.

Tuesday, January 29, 2019

Testing different stitching software

After babysitting the Lichen Angel process last weekend (clocking in at probably 30-40 hours), I figured I should look into better methods. In general I would like to stay away from any rendering/processing time that runs more than 8-10 hours, aka overnight. A couple of people have already told me that Agisoft has not kept up with its competitors and advised me to look at RealityCapture.

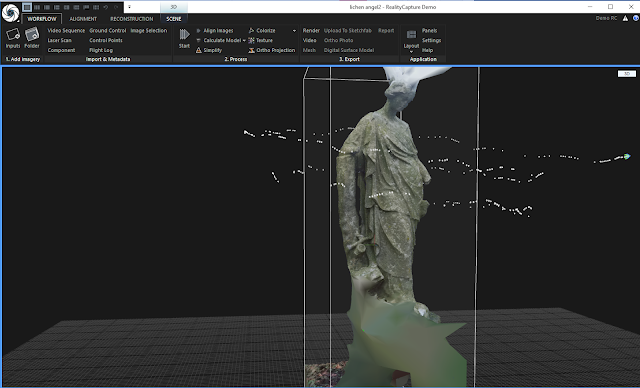

So I did-- I threw the same batch into RealityCapture and holy shit. In under five minutes I had a chunk of the same model in what looked like comparable, if not better quality.

So I threw the photoburst batch at it as well (384 photos, some are blurry, no preprocessing). In an hour I got this:

One option that I'm seriously considering is their Freelancer version, which is 100 euros for one month, and for some reason $40/month on Steam.

I also looked at 3Dflow's 3DF Zephyr, which was a similar story. Amazing demo-- it processed the same batch (well, a partial batch, clamped at 50 photos) in a matter of minutes. Their prices are in the thousands of dollars.

I have tasted luxury and now I NEED it.

Here's everything else I've tried:

Autodesk ReCap is at the mercy of Autodesk's cloud computing; in general I found the turnaround time is about a day. It clamps picture quality, max 50 photos. Free education license.

Agisoft works but is very slow. It needs a lot of preprocessing, which it doesn't help you with. Temperamental; I've had it fail on me before.
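Since Agisoft leaves preprocessing to you (and the 384-photo photoburst batch had blurry frames in it), one cheap pre-pass is to flag blurry photos before processing. A common heuristic is the variance of the Laplacian: sharp images have strong edges, so the filter response swings widely, while blurry ones stay flat. This is a minimal pure-Python sketch of that idea, assuming frames are already loaded as grayscale 2D lists; the function names and the threshold are hypothetical and would need tuning per camera and subject. In practice an imaging library such as OpenCV would handle loading and filtering much faster.

```python
# Hypothetical pre-pass: flag blurry frames before a photogrammetry run.
# Frames are assumed to be already loaded as 2D lists of grayscale values
# (0-255), so this sketch needs no imaging library.
from statistics import pvariance


def laplacian_variance(gray):
    """Variance of the 4-neighbour Laplacian over interior pixels.

    Sharp images have strong edges, so the Laplacian response swings widely;
    blurry images give a flatter response and therefore a lower variance.
    """
    h, w = len(gray), len(gray[0])
    if h < 3 or w < 3:
        raise ValueError("image must be at least 3x3 pixels")
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            lap = (gray[y - 1][x] + gray[y + 1][x]
                   + gray[y][x - 1] + gray[y][x + 1]
                   - 4 * gray[y][x])
            responses.append(lap)
    return pvariance(responses)


def cull_blurry(images, threshold):
    """Split frame names into (keep, reject) lists by a sharpness threshold.

    `threshold` is a hypothetical tuning value; it depends on the camera,
    resolution and subject, so there is no universal number.
    """
    keep, reject = [], []
    for name in sorted(images):
        target = keep if laplacian_variance(images[name]) >= threshold else reject
        target.append(name)
    return keep, reject


if __name__ == "__main__":
    # Synthetic 8x8 frames: a hard-edged checkerboard vs. a flat grey card.
    sharp = [[255 if (x + y) % 2 else 0 for x in range(8)] for y in range(8)]
    flat = [[128] * 8 for _ in range(8)]
    print(cull_blurry({"IMG_0001": sharp, "IMG_0002": flat}, threshold=100.0))
```

The rejected frames could then be reviewed by hand rather than deleted outright, since a "soft" frame can still help alignment.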

And here are some opinions from an expert: https://3dscanexpert.com/realitycapture-photogrammetry-software-review/

An Essay on Walking Simulators

Another source of my frustration last week has been that while researching and problem-solving technical issues, I feel like I am forgetting why I am even excited about environment art in the first place. This feeling isn't new to me; it is something I struggle with a lot as a digital artist. So I thought I might write about some of my favorite games.

Monday, January 28, 2019

After Class, Week 04

- capture lighting information while on set

- keep your end goal in mind while researching processes: budget, artistic endeavor

- get out of the research phase.

- develop a roadmap for the term and produce work on a weekly basis. You might not have your contribution to the field figured out yet, but figure out your project.

- next week is week 5!

Next steps--

Define my arsenal of tools in a separate post: equipment and documentation. Focus on equipment that would be accessible to you after graduation.

Find location (easy to access, easy to schedule reshoots, natural elements, low on complexities/variables)

Schedule-- do you need an extra person?

Saturday, January 26, 2019

Project Pitch

Goal: Build a virtual space based off a real world location.

- Scout location

I'm looking for a room-sized space with lots of natural elements, maybe outside or a greenhouse. Something with interesting design and a beautiful aura. Determine scanning needs and object separations before the shoot.

- Assemble equipment

Determine what can/cannot be taken out in the field. Optimize for a 1-2 person team. Might need a car. Hard drive? Plan for a reshoot.

- Obtain data

In addition to 3D data, take notes on location.

- Process data

- Build a walkable level

Use the assets generated from the acquired data to recreate the space in a game engine. It should demonstrate a beautiful render and an accurate capture of the real-world space. It would be nice to include some additional atmospheric design, such as dust particles. As a flex goal, I would like to target a room-scale VR build.

--

I would like to work towards this end project over the next few weeks, aiming to complete it before the end of the quarter. For my first run through this process, it probably makes sense to choose a location close to University City, but eventually I would like to scout farther away.

Friday, January 25, 2019

End of Week 03

It is Friday. Yesterday I shot some photos in Woodlands Cemetery. It was an overcast day, and the stone there is weathered and covered in lichens, making for ideal conditions and ideal subjects. While I reflect here, those batches are processing away.

Thursday, January 24, 2019

Scanning Bugs

I found a paper that documents exactly what I need to continue with my cicada specimen, and I'm very excited about it! Scholarly research can be pretty nifty, who knew.

https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0094346

The Looking Glass

There is a desktop hologram display device in the ACELab called "The Looking Glass".

It is essentially a small monitor that projects 40 simultaneous images, which creates the 'hologram'. It's actually pretty impressive: there is a real sense of depth and parallax in the 3-inch-deep glass. Although it seems unrelated to my research at the moment, I have been having fun setting it up and playing the demos. Many of the demo apps use a Leap Motion controller as well.

Wednesday, January 23, 2019

Asking for help

Google can't do everything, so I've been asking around for thoughts and opinions on point based rendering.

Friday, January 18, 2019

End of Week 2

I've continued setting up some photogrammetry processes to see if I can obtain any successful stitches. I'm comparing Agisoft PhotoScan with Autodesk's ReCap. The main difference is that Agisoft processes locally on your machine and does not limit how much you can feed it (for better or worse). There is a batch process option which includes thoughtful touches like "save after each step" and a final export option, so I'm comfortable throwing photo sets at it to run overnight. I just learned you can leverage the GPU; I'm kicking myself for not checking sooner, but I'll use it for my next round. ReCap processes on Autodesk's cloud, but limits you to 100 photos per object (and I suspect they get compressed). I don't expect great models yet, but I'm letting them process anyway.

Meanwhile, I am still researching emergent technologies in 3D data capture and in point based rendering.

Point Based Rendering--

I tried the Unity package "PCX", which handles point cloud .ply files, and was able to pull in their example file. Normally Unity does not support .ply, so that alone was kind of cool. The test file was a large Azalea bush (sidenote: hosted on Sketchfab). There are two shaders, point and disc. Both looked like, well, point clouds. I kept thinking, if only there was some way to draw some kind of surface to connect all these points.

I have yet to find someone who specializes in point based rendering, so I'll have to do some more serious asking. I am not yet convinced there is an advantage-- none of the workflows I have researched use this. Yes, it would save a lot of steps optimizing the data into polygons and maps.

But I need to confirm that:

1) points can be rendered and lit beautifully, in realtime

2) and they don't look like points

3) I don't need to build custom tools to achieve this.

Otherwise, I'm sticking to mesh based rendering.
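To demystify what an importer like Pcx is actually reading: Pcx works with PLY files, which are a plain-text header (declaring the vertex count and per-point properties) followed by tightly packed binary records. Below is a minimal sketch of a reader, assuming binary little-endian files whose only element is `vertex` and whose properties are all float32 or uint8 (e.g. x, y, z, red, green, blue). It is not Pcx's own importer code; the function name is hypothetical, and real files with list properties or faces are rejected rather than guessed at.

```python
# Minimal reader for binary little-endian PLY point clouds (a sketch).
# Assumes one 'vertex' element with only float32/uint8 scalar properties.
import struct

# PLY scalar type names -> struct format characters
_PLY_TYPES = {"float": "f", "float32": "f", "uchar": "B", "uint8": "B"}


def read_ply_points(data):
    """Return a list of dicts, one per vertex, keyed by property name."""
    header_end = data.index(b"end_header\n") + len(b"end_header\n")
    header = data[:header_end].decode("ascii").splitlines()
    if header[0] != "ply" or header[1] != "format binary_little_endian 1.0":
        raise ValueError("only binary little-endian PLY is supported")

    count, names, fmt = 0, [], "<"  # '<' = little-endian, no padding
    for line in header[2:]:
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "element":
            if parts[1] != "vertex":
                raise ValueError(f"unsupported element: {parts[1]}")
            count = int(parts[2])
        elif parts[0] == "property":
            if parts[1] == "list" or parts[1] not in _PLY_TYPES:
                raise ValueError(f"unsupported property: {line}")
            fmt += _PLY_TYPES[parts[1]]
            names.append(parts[2])
        # 'comment' and 'end_header' lines are ignored

    record = struct.Struct(fmt)
    points = []
    for i in range(count):
        values = record.unpack_from(data, header_end + i * record.size)
        points.append(dict(zip(names, values)))
    return points
```

Seen this way, a point cloud really is just positions plus colors with no connectivity, which is why the point and disc shaders can only ever draw, well, points.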

3D Data Capture--

This is where my brain starts melting a little.

I like the way the Wikipedia page for Volumetric Video is structured, although I might call it Volumetric Capture, but let's start here: https://en.wikipedia.org/wiki/Volumetric_video

So under History we have: CG, Lasers, Kinect, Photogram, VR, and Light Field Photography. This last one is the most unfamiliar to me, although it has been brought up to me a couple of times. I mentioned it again to Nick and he plopped a Lytro camera on my desk. Lytro has since shut down (Raytrix, a separate company, also makes light field cameras), and I'm not even sure this device is still supported. It is a mysterious hunk of magical tech that I don't know what to do with for now. Perhaps, if it is useful, it will make photo scanning easier and more accurate.

Last semester I stated that I was uninterested in what I was generalizing as 'performance capture'. Researching in this direction is making me second-guess that statement.

Thursday, January 17, 2019

Rendering pointclouds

Collecting Google research on solutions for rendering point clouds in realtime.

Edit (1/31) Some more links

https://www.atomontage.com/

https://medium.com/@EightyLevel/how-voxels-became-the-next-big-thing-4eb9665cd13a

Euclideon-

No longer advertises game engine solutions; instead it has two products aimed at a more general scanning audience. The "Unlimited Detail" render engine is now listed as a feature within these software solutions. I've downloaded trials of both and will be poking around.

Atom View

Another engine like Euclideon that uses 'atoms'. It has beautiful demos, but at the moment only advertises a private beta. This article does a comparison study.

Potree for WebGL

Interesting optimization for web browsers; might be useful elsewhere.

PCX for Unity

Looks promising, will also install and try out.

Point Cloud Plugin for Unreal

https://pointcloudplugin.com/

Straight to the point.

GigaVoxels for CUDA/GLSL

I probably won't use this, but it is interesting to read about.

Faro VR Generator for Oculus

Third-party tool to bring point clouds into VR. Pretty stunning results.

This week I'm asking: are points better than polys? Are points fundamentally different from verts, voxels, sprites, "atoms"? And ultimately, how big is TOO big for massive pointcloud datasets in realtime?
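A back-of-the-envelope way to start on "how big is too big": raw point data grows linearly with point count, so multiplying by bytes per point gives a quick sense of whether a dataset could even sit in GPU memory all at once. The 16-byte layout (three float32 coordinates plus RGBA color) and the memory budgets below are assumptions for illustration, not figures from any of the engines above.

```python
# Rough memory estimate for a raw point buffer (a sketch, not engine-specific).
# Assumed layout: 3 x float32 position (12 B) + 4 x uint8 RGBA colour (4 B).
BYTES_PER_POINT = 16


def point_cloud_bytes(num_points, bytes_per_point=BYTES_PER_POINT):
    """Raw size in bytes; normals or float64 positions would make it larger."""
    return num_points * bytes_per_point


def fits_in_budget(num_points, budget_gib, bytes_per_point=BYTES_PER_POINT):
    """True if the raw buffer fits in a (hypothetical) memory budget in GiB."""
    return point_cloud_bytes(num_points, bytes_per_point) <= budget_gib * 1024 ** 3


if __name__ == "__main__":
    for n in (10_000_000, 100_000_000, 1_000_000_000):
        gib = point_cloud_bytes(n) / 1024 ** 3
        print(f"{n:>13,} points -> {gib:6.2f} GiB")
```

This ignores index buffers, acceleration structures and driver overhead, so real usage is higher. Streaming and level-of-detail schemes exist largely because these totals climb into the gigabytes so quickly.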

And on a tangent, Maya 2019 has just been released with some Very Exciting updates to their viewport. Everything's going realtime nerds!

Wednesday, January 16, 2019

After class, week 2

Yesterday I received a lot of feedback to chew on. Since we're entering week two, it's a good time to re-orient and really evaluate whether I'm working in the right direction.

There are essentially two sides to 3D scanning: data acquisition and processing (and post processing).

On the data acquisition side, I can see where my tests are going. Continuing to become familiar with the equipment is important and I still would like to get a grasp on what tools are appropriate for when. I am still asking the question from my previous post, what are the advantages of laser scanners over photogrammetry, if any at all. I have not successfully captured a prop object yet.

That being said, perfecting the scanning process IS something I can continue to work on in parallel to other research. The processing side has many more unknowns to me.

This might be a good time to post some of my references.

- First, virtualvizcaya.org has a great article on "What is 3D Documentation", which emphasizes the need for 3D documenting in cultural spaces + explains a range of 3D scanning/ capture techniques and their different scenarios. Not to mention it features a massive point cloud of the whole estate rendered right in your browser (note: WebGL with Potree).

- Second, The Vanishing of Ethan Carter production blog boasted, in 2014, that they were starting a "visual revolution" for games. A small indie team used photogrammetry to capture real-world assets and locations for their spooky walking simulator. (The game itself was rather disappointing! I have a lot of opinions about it that need their own post.)

- Lastly, Substance Painter has been publishing a lot of great tutorials on scanning processes as well, such as this one on recreating ivy leaves. In particular, I am excited to use their delighting tool to generate a true diffuse map out of photo data.

Now for the "other" research... rendering. It is one thing to optimize the scan data into 3D polygonal models for use in realtime game engines or prerendered footage, but in class Paul brought up a completely new concept to me: rendering points. There are engines that directly render points instead of polys; an example is the Vizcaya site above. This site uses Potree, an open-source WebGL renderer. While Potree is noticeably low resolution, it IS impressive considering it runs on web/mobile. The Vizcaya dataset is public domain as well, so one of my next steps might be downloading, installing and poking around in this existing project.

Rendering points is still so unfamiliar to me that I have no, ahem, point of reference to begin with. I need to start from scratch. Paul also mentioned the Euclideon renderer, which has an impressively gossipy paper trail of information lurking around the web.
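As I understand it, Potree keeps the browser responsive by organizing points into a multi-resolution octree and only loading the levels of detail the camera currently needs. The sketch below shows a much simpler cousin of that idea, voxel-grid subsampling: bucket points into cubes, keep one centroid per occupied cube, and halve the cube size for each finer level. It is not Potree's algorithm or file format; the function names and cell sizes are hypothetical.

```python
# Voxel-grid subsampling as a toy level-of-detail scheme (not Potree's code).
import math
from collections import defaultdict


def voxel_subsample(points, cell):
    """Keep one point (the centroid) per occupied cube of edge length `cell`."""
    buckets = defaultdict(list)
    for x, y, z in points:
        key = (math.floor(x / cell), math.floor(y / cell), math.floor(z / cell))
        buckets[key].append((x, y, z))
    result = []
    for key in sorted(buckets):  # sorted only for deterministic output
        group = buckets[key]
        n = len(group)
        result.append(tuple(sum(p[i] for p in group) / n for i in range(3)))
    return result


def build_levels(points, base_cell, num_levels):
    """Coarse-to-fine levels: the cell size halves at each level, and since
    finer cells nest inside coarser ones, no level has fewer points than the
    level before it."""
    return [voxel_subsample(points, base_cell / 2 ** i) for i in range(num_levels)]
```

A renderer could draw the coarse level for distant views and swap in finer levels as the camera approaches, which is the basic trade that makes huge datasets viewable on modest hardware.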

Monday, January 14, 2019

New toys

My pop up light tent and rotating stand came in today.

Testing with the Eva M, the setup seems to be helping. I need to set up a spotlight somewhere in the lab, and I need to steam the backdrop-- I've noticed that stitching errors sometimes occur now that the background no longer moves with the rotating object.

Saturday, January 12, 2019

End of Week 01

Collecting my thoughts--

This week I opened up the two Artec scanners, the higher-end Space Spider and the more mid-range Eva M. Both are older models that have been in storage. These are handheld structured-light scanners with built-in cameras.

Thursday, January 10, 2019

Wednesday, January 9, 2019

Taking Inventory

Today was the first day I got access to the ACELab and was introduced to the library of capture equipment that is available to the department. I say 'capture equipment' because this includes DSLR cameras, 360 cameras, 3D scanners, the motion capture studio space, and the list goes on. In particular, there is this workstation with hefty specs.

The side panel is removed, I assume to show off his three glowing NVIDIA graphics cards.

I am sitting under my good friend Val's thesis poster, which she presented at SIGGRAPH (and other places) three years ago. I remember that SIGGRAPH 2020 will be in Washington, DC. I hope to submit my work that year; it is rare for the conference to be hosted on the East Coast.

In chatting with my independent study advisor Nick, we discuss the plan for what to accomplish this term and land on a still fairly vague idea: begin researching the photogrammetry process. He pulls three laser scanners out of storage. One of them, the desktop NextEngine scanner, is familiar to me from undergrad, where I worked on digitally fabricated sculptures as part of my fine arts minor.

I end up with a question that doesn't have a readily available answer-- what is the advantage of laser scanning over photogrammetry? For one thing, lasers do not capture color data, so to make up for this many laser scanners have a camera (or cameras) built in anyway. Yes, lasers can be more accurate, but the cost of a high-res device easily goes into the tens of thousands of dollars, whereas a high-definition camera might stay affordable at a professional level for the same output resolution.
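To put "the same output resolution" into rough numbers: under a simple pinhole-camera model, the patch of the subject covered by one pixel is the sensor's pixel pitch scaled by distance over focal length. The camera specs below (a 23.5 mm wide sensor, 6000 px across, 50 mm lens, 1 m away) are hypothetical, and real reconstruction accuracy also depends on overlap, focus, lens distortion and the software, so this only gives a sense of the finest detail a single photo records.

```python
# Size on the subject covered by one pixel (pinhole model; a sketch).
def object_mm_per_pixel(sensor_width_mm, image_width_px, focal_length_mm, distance_mm):
    """pixel pitch = sensor width / image width
    footprint   = pixel pitch * distance / focal length"""
    pixel_pitch_mm = sensor_width_mm / image_width_px
    return pixel_pitch_mm * distance_mm / focal_length_mm


if __name__ == "__main__":
    # Hypothetical APS-C body: 23.5 mm sensor, 6000 px wide, 50 mm lens, 1 m away.
    res = object_mm_per_pixel(23.5, 6000, 50.0, 1000.0)
    print(f"{res:.3f} mm per pixel")
```

The same formula shows the levers a photographer has: moving closer or using a longer lens shrinks the footprint proportionally, at the cost of needing more photos to cover the object.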

I think I have arrived at my first experiments: to find the best method of acquiring scan data, comparing photogrammetry to the available laser scanners. When I say best, it is important to note that every scanned object/space is different and there is no one-size-fits-all. Every method will have a different trade-off of accuracy vs time vs cost. The Star Wars Battlefront team emphasizes that they use a combination of modeling methods, including photogrammetry, scanning, sculpting and box modeling, depending on the needs of the space.

This week I hope to flesh out a better idea of an end deliverable to work towards during this term, but I already know where I will start tomorrow.