https://drive.google.com/open?id=1yHXE2Gp5NKyeFHSFZbfRDL9HpJDpTSiC

Note: the screenshots are totally out of order. I've added captions for clarity.

Unity's expert guide describes how to capture different types of objects and emphasizes collecting them as separate pieces. So I decided to strategize and break up the space according to... what felt right... and now I've learned what worked and what didn't.

I've talked about preparing game-ready assets in my previous projects.

These are the steps I used this time:

1. In Zephyr, make a clean selection of the dense mesh. Generate the mesh, then the textured mesh.

2. Decimate the mesh to a reasonable polycount. (You could also export it at this point to retopologize and UV it by hand.) Generate a textured mesh again for the low-poly mesh.

3. Export both, with "resize to single texture" enabled.

4. In Substance Designer, load both.

5. Open the low-poly mesh in the baker and assign the high-poly mesh.

6. Bake all maps.

7. Import the low-poly mesh and all maps into Unity.

8. Create a new material (or duplicate an existing material to reuse its settings) and plug in the maps.
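A note on step 8 for HDRP: the Lit shader doesn't take separate metallic, AO and roughness textures. It expects them packed into one "mask map" (R = metallic, G = ambient occlusion, B = detail mask, A = smoothness), and smoothness is the inverse of the roughness that Substance usually outputs. A minimal sketch of that packing, assuming the maps are already loaded as flat lists of 0 to 1 floats of equal length (image loading is left out, and `pack_hdrp_mask_map` is a hypothetical helper, not a Unity or Substance API):

```python
from typing import List, Optional, Tuple

Pixel = Tuple[float, float, float, float]


def pack_hdrp_mask_map(
    roughness: List[float],
    ambient_occlusion: List[float],
    metallic: Optional[List[float]] = None,
    detail_mask: Optional[List[float]] = None,
) -> List[Pixel]:
    """Pack grayscale maps into HDRP Lit mask-map channels.

    Channel layout: R = metallic, G = ambient occlusion,
    B = detail mask, A = smoothness (1 - roughness).
    All inputs are flat per-pixel lists of values in [0, 1].
    """
    n = len(roughness)
    # Scanned surfaces like brick, concrete and stone are usually non-metallic.
    if metallic is None:
        metallic = [0.0] * n
    # 1.0 = apply the detail map everywhere; ignored when no detail map is assigned.
    if detail_mask is None:
        detail_mask = [1.0] * n
    if not (len(ambient_occlusion) == len(metallic) == len(detail_mask) == n):
        raise ValueError("all maps must be the same size")

    packed: List[Pixel] = []
    for r, ao, m, d in zip(roughness, ambient_occlusion, metallic, detail_mask):
        smoothness = 1.0 - r  # HDRP wants smoothness; Substance outputs roughness
        packed.append((m, ao, d, smoothness))
    return packed


# Two pixels: a rough, occluded one and a smoother, unoccluded one.
mask = pack_hdrp_mask_map(roughness=[0.75, 0.25], ambient_occlusion=[0.5, 1.0])
print(mask)  # → [(0.0, 0.5, 1.0, 0.25), (0.0, 1.0, 1.0, 0.75)]
```

When importing the packed texture into Unity, untick sRGB, since the mask map stores data rather than color.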

--

Overall, some combinations worked better than others.

|

| this one looks okay |

|

| I thought I could optimize this asset by capturing around the edges... |

|

| ...and filling in with a material. In reality, I'm not saving that many polys. |

|

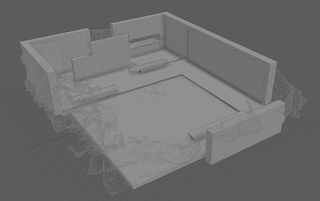

| scan vs model |

|

| maybe both? |

|

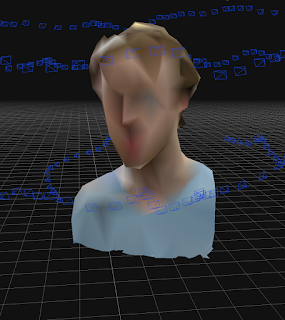

| surprisingly pleased about this one, however the scan quality is lacking. but the two decorative pieces merged well. |

|

| too many things overlapping... |

Things that are going wrong...

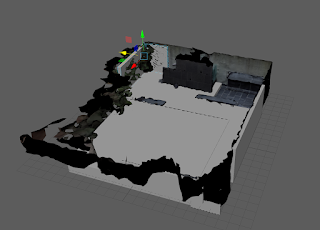

- My world scale is completely wrong. Photogrammetry doesn't give you real-world scale unless you capture a known reference, so my reconstructions were all over the place. I should have centered all my assets before exporting. I found myself nudging rotations back and forth, baaaack and fooorth, scaling things down by 70-80%, and carefully eyeballing everything into place. Plus, the Z-up axis didn't help, since Unity is Y-up. I'm really glad I had the 360 reconstruction (the layout pass) to help place everything.
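Centering and scaling before export would have saved most of that nudging. A minimal sketch of that cleanup, assuming vertices are plain (x, y, z) tuples from a right-handed, Z-up export, and that you know one real distance on the object (for example, a doorway width measured on site). `normalize_scan` and its parameters are hypothetical; in practice this usually happens in a DCC tool or the exporter, not by hand:

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def normalize_scan(
    vertices: List[Vec3],
    measured_length_m: float,
    scanned_length: float,
) -> List[Vec3]:
    """Center a scan, rescale it to meters, and convert Z-up to Unity's Y-up.

    measured_length_m: a real distance on the object, measured on site (meters).
    scanned_length: the same distance as it appears in the reconstruction.
    """
    if scanned_length <= 0:
        raise ValueError("scanned_length must be positive")
    scale = measured_length_m / scanned_length

    # Center on the bounding-box midpoint in X/Y and put the lowest point at Z = 0,
    # so the pivot sits on the ground directly under the object.
    xs, ys, zs = zip(*vertices)
    cx = (min(xs) + max(xs)) / 2.0
    cy = (min(ys) + max(ys)) / 2.0
    floor_z = min(zs)

    result: List[Vec3] = []
    for x, y, z in vertices:
        sx, sy, sz = (x - cx) * scale, (y - cy) * scale, (z - floor_z) * scale
        # Swap Y and Z: the Z-up height becomes Unity's Y-up height. Swapping two
        # axes also flips handedness (right-handed source, left-handed Unity),
        # so the object is not mirrored.
        result.append((sx, sz, sy))
    return result


# The scan says this object is 4 units wide; on site it measured 2 m.
verts = [(10.0, 20.0, 5.0), (14.0, 22.0, 5.0), (12.0, 21.0, 9.0)]
print(normalize_scan(verts, measured_length_m=2.0, scanned_length=4.0))
# → [(-1.0, 0.0, -0.5), (1.0, 0.0, 0.5), (0.0, 2.0, 0.0)]
```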

- The materials I generated in Alchemist didn't totally translate over to Unity. I kind of expected that, but one thing I got really stuck on was vertex displacement. The height maps look so pretty in Substance! After some research, I realized you can, in fact, do real geometric displacement in Unity:

https://blogs.unity3d.com/2019/02/12/creating-an-interactive-vertex-effect-using-shader-graph/
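The core of vertex displacement, in Shader Graph or anywhere else, is moving each vertex along its normal by the sampled height: p' = p + n · h · s, with h the height-map value and s a strength value. The plain-Python sketch below (names and numbers are illustrative, not taken from that Unity post) also shows two common ways faces can come apart under displacement, offered as likely suspects rather than a confirmed diagnosis. At hard edges, one corner is stored as several vertices with different normals, so the copies get pushed in different directions. At UV seams, the copies sample the height map at different UVs, so they get pushed by different amounts. Displacement also only moves vertices that already exist, so a heavily decimated mesh can't reproduce fine height-map detail in its silhouette.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / length, v[1] / length, v[2] / length)


def displace(position: Vec3, normal: Vec3, height: float, strength: float) -> Vec3:
    """Move a vertex along its normal: p' = p + n * h * s."""
    n = normalize(normal)
    offset = height * strength
    return (position[0] + n[0] * offset,
            position[1] + n[1] * offset,
            position[2] + n[2] * offset)


corner: Vec3 = (1.0, 1.0, 0.0)  # a box corner shared by a top face and a side face
strength = 0.5

# Hard edge: the corner is stored once per face, each copy with its own face normal.
top_copy = displace(corner, (0.0, 1.0, 0.0), 1.0, strength)
side_copy = displace(corner, (1.0, 0.0, 0.0), 1.0, strength)
print(top_copy, side_copy)  # prints (1.0, 1.5, 0.0) (1.5, 1.0, 0.0): the copies split

# UV seam: same averaged normal, but each copy reads a different height value.
smooth_normal: Vec3 = (1.0, 1.0, 0.0)
seam_a = displace(corner, smooth_normal, 1.0, strength)
seam_b = displace(corner, smooth_normal, 0.2, strength)
print(seam_a != seam_b)  # prints True: the copies still drift apart
```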

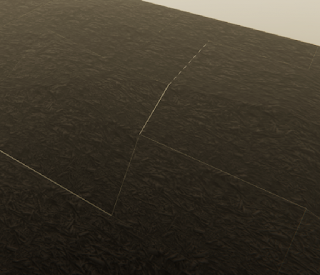

But right now I'm stuck on this issue: the height separates the faces.

Increasing the height value doesn't displace the silhouette of the mesh; instead, it causes these separations between faces. I need to ask someone who knows more about shader development to clarify what's going on.

- The other issue I had with material scanning was texel density, again due to mismatched world scale. Currently, if the game camera is more than a few feet away from one of the materials I made, the repetition becomes obvious. Yes, the material is tileable and somewhat parametric, but that can't make up for the texel scale issue: if I want my bricks to be brick-sized, the material has to repeat dozens of times. Even on something as random as the concrete (which had an extra randomizing filter on it), the repetition is obvious from far enough away.
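To put rough numbers on the texel problem: texel density is texture pixels per meter of surface, and the number of tile copies on a surface is its area divided by the area one tile covers. A small sketch with illustrative numbers (the 2048 px texture, 4 m x 3 m wall and capture sizes are made up, not measurements from this project):

```python
def texel_density(texture_px: int, tile_size_m: float) -> float:
    """Texels per meter when one texture tile covers tile_size_m of real surface."""
    return texture_px / tile_size_m


def tile_count(width_m: float, height_m: float, tile_size_m: float) -> float:
    """How many copies of a square tile it takes to cover a width x height surface."""
    return (width_m / tile_size_m) * (height_m / tile_size_m)


# Illustrative only: a 2048 px texture on a 4 m x 3 m wall,
# captured from a 0.5 m patch vs. a 1 m patch.
for captured_m in (0.5, 1.0):
    print(f"{captured_m} m capture: "
          f"{texel_density(2048, captured_m):.0f} texels/m, "
          f"{tile_count(4.0, 3.0, captured_m):.0f} copies on the wall")
# → 0.5 m capture: 4096 texels/m, 48 copies on the wall
# → 1.0 m capture: 2048 texels/m, 12 copies on the wall
```

The trade-off is immediate: capturing a larger patch cuts the number of visible copies, but at the same texture resolution it also halves the texel density, so capture size and resolution have to be planned together.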

I should have listened to Unity and photographed a one-meter square, so that one tile of the material corresponds to a known meter of real surface.

- Foliage is totally out of scope.

ARGH!

Things that went right

- The HDRP is a dream to work with. As the director of one of Unity's short film demos describes it: "High Definition Render Pipeline (HDRP), which — from an artist perspective — just automatically makes things look nice." https://blog.siggraph.org/2019/03/an-irresistible-way-to-make-films-using-real-time-technology-for-book-of-the-dead.html/

- Actually this is a really exciting observation for the state of computer graphics... tools like the HDRP and Alchemist are helping things just automatically look nice. I'm here for it.

- She also uses the word 'opportunistic' to talk about her work. I love the idea of game development being spontaneous and opportunistic, since it is traditionally such slow and unforgiving work.

- The materials were gorgeous to look at anyway, and the Substance plugin was great to work with. Tri-planar projection solves SO many problems: it reduces the dependence on UV mapping (though it only fully replaces UVs in simple scenarios), and it unifies world scale, so multiple assets can share the same material and a mesh can be scaled up or down without affecting the material. There are more features to talk about here, but all in all it was a total lifesaver. None of that back-and-forth Targa export nonsense.

- The layout pass (the 360 quick pass reconstruction) was EXTREMELY helpful. It served as a blueprint of the space to iterate on.
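The tri-planar idea is simple enough to sketch: project the texture along the three world axes and blend the three samples by how much the surface faces each axis. Because the UVs come from world position instead of the mesh's own UVs, every asset using the material gets the same scale. A minimal sketch of the weights and UVs, assuming a blend `sharpness` exponent and a `tile_size_m` (meters per tile); both names are hypothetical, and the Substance plugin's actual implementation may differ:

```python
from typing import Tuple

Vec2 = Tuple[float, float]
Vec3 = Tuple[float, float, float]


def triplanar_weights(normal: Vec3, sharpness: float = 4.0) -> Vec3:
    """Blend weights for the X, Y and Z projections (normal must be non-zero)."""
    wx, wy, wz = (abs(c) ** sharpness for c in normal)
    total = wx + wy + wz
    return (wx / total, wy / total, wz / total)


def triplanar_uvs(world_pos: Vec3, tile_size_m: float) -> Tuple[Vec2, Vec2, Vec2]:
    """UVs for the three projections, taken from world position, not mesh UVs."""
    x, y, z = (c / tile_size_m for c in world_pos)
    return (z, y), (x, z), (x, y)  # projected along X, along Y (top-down), along Z


# A floor point (normal straight up, Unity Y-up) only uses the top-down projection.
print(triplanar_weights((0.0, 1.0, 0.0)))  # → (0.0, 1.0, 0.0)
# A 45-degree slope splits evenly between the Y and Z projections.
print(triplanar_weights((0.0, 0.7071, 0.7071)))  # → (0.0, 0.5, 0.5)
# UVs depend only on where the point is in the world, at 1 tile per meter here.
print(triplanar_uvs((2.5, 0.0, 1.0), tile_size_m=1.0))
# → ((1.0, 0.0), (2.5, 1.0), (2.5, 0.0))
```

Scaling a mesh moves its vertices in world space, so the texture keeps its real-world size instead of stretching with the mesh.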

General takeaways.

- Realizing most of these assets are better off modeled, like walls.

- Realizing that delighting is not just a 'clean' albedo; it's also color matching and ensuring matched shaders.

- Realizing that polycounts are not as restrictive as I thought.

- Restraining myself from brute-force cleaning assets... I know I can make things look good if I sink hours into it, but my goal is to determine best practices, not output a portfolio piece.
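On the color-matching side of delighting: one simple approach (a common technique, not necessarily what Unity's guide or Alchemist does) is to include a neutral gray reference in each capture and compute per-channel gains that bring that patch to a known value, so albedos shot under different light end up comparable. A minimal sketch, assuming linear RGB values in 0 to 1; the 0.18 target is the conventional mid-gray, and the function names are hypothetical:

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]


def gray_card_gains(measured_patch: RGB, target: float = 0.18) -> RGB:
    """Per-channel gains that map the photographed gray patch to a neutral target."""
    if min(measured_patch) <= 0:
        raise ValueError("patch values must be positive")
    r, g, b = measured_patch
    return (target / r, target / g, target / b)


def apply_gains(pixels: List[RGB], gains: RGB) -> List[RGB]:
    """Scale every pixel by the gains, clamping to the valid [0, 1] range."""
    gr, gg, gb = gains
    return [(min(r * gr, 1.0), min(g * gg, 1.0), min(b * gb, 1.0))
            for r, g, b in pixels]


# A warm-lit capture: the gray card reads orange instead of neutral.
gains = gray_card_gains((0.24, 0.18, 0.12))
corrected = apply_gains([(0.24, 0.18, 0.12), (0.48, 0.36, 0.24)], gains)
print(corrected)  # the card pixel comes out (approximately) neutral 0.18 gray
```

Applying the same correction to every texture from a capture session keeps the assets consistent with each other, which matters as much as removing the baked-in lighting.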

Final polishing touches:

- Water in the fountain?

- Obtain an HDR lat-long image for the sky. Examples here:

https://assetstore.unity.com/packages/essentials/beta-projects/unity-hdri-pack-72511

- While I'm at it... actually, I just wanna reshoot the whole thing. This video from Gnomon, https://www.youtube.com/watch?v=IU5XTtS6ALk, demonstrates capturing an indoor architectural space for VR; the trailer goes through the whole process as a timelapse.

Judging by the camera icons, he just brute-force shot a ton of photos. Some individual assets are processed separately, but mainly he's working with one giant dataset. This is kind of the opposite of my approach this term, so I'm really interested in replicating it, especially with the 360 camera.