I should also mention that I've switched from working on the big machine to my personal laptop, so my graphics processing power has been cut to a third (at least, haha). Still, my 15-inch Spectre is not too bad, and I'd rather be targeting midrange PCs anyway.

The first thing I need to do is export all of my Alchemist materials to something usable. I notice right away that I can't consistently export 4K texture maps: Alchemist crashes, although I did manage to export two of my ten materials at 4K. Perhaps it's a bug because Alchemist is still in beta, or maybe it's my laptop. Displaying 4K maps in the viewport also crashes it. Either way, I'm stuck with 2K textures for now.
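For a rough sense of why 4K is so much heavier than 2K, here's a back-of-the-envelope sketch in Python. It assumes uncompressed 8-bit RGBA maps (4 bytes per pixel) and a hypothetical four maps per material; Unity usually compresses textures on import, and I have no idea how Alchemist manages memory internally, so treat these as ballpark upper bounds rather than an explanation of the crash.

```python
def texture_bytes(size_px: int, bytes_per_pixel: int = 4, mipmaps: bool = True) -> int:
    """Approximate memory for one square, uncompressed texture map.

    Assumes 8-bit RGBA (4 bytes per pixel) by default. With mipmaps, each level
    is half the width and height of the previous one, down to 1x1.
    """
    if size_px < 1:
        raise ValueError("size_px must be at least 1")
    total = 0
    size = size_px
    while True:
        total += size * size * bytes_per_pixel
        if not mipmaps or size == 1:
            break
        size //= 2
    return total


MIB = 1024 * 1024
MAPS_PER_MATERIAL = 4  # hypothetical: e.g. base color, normal, mask, height
NUM_MATERIALS = 10

for size in (2048, 4096):
    per_map = texture_bytes(size, mipmaps=False)
    total = per_map * MAPS_PER_MATERIAL * NUM_MATERIALS
    print(f"{size}px: {per_map / MIB:.0f} MiB per map, {total / MIB:.0f} MiB for all materials")
```

Doubling the resolution quadruples the pixel count, so under these assumptions each 4K map is 64 MiB versus 16 MiB at 2K, and all ten materials go from 640 MiB to 2560 MiB before compression.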

Another frustration is that I have NO idea where Alchemist saves my working files, so the only way to get assets OUT is through the exporter. That means that, at the moment, I can't move my working documents to a more powerful computer to rule out my laptop's weaker hardware as the cause. To be fair, Allegorithmic warned us not to use their beta release in production, so I kinda asked for this.

Jumping ahead, I later realize that the Substance plugin for Unity reads SBSAR files, which are essentially a package of your texture maps. It's much easier (and more accurate) to use these instead of the exported image files. Still, though, I'm clamped to 2K exports for whatever reason.

--

So now, I have a folder full of 2K SBSAR files. I did some investigating into current Unity project templates. I've been out of the game (pun intended!) for a little while now, so I kind of wanted to work on something more modern (again, probably asking for trouble).

I first looked into the High Definition Render Pipeline (HDRP), which was used for the Fontainebleau demo (the same demo that came with the expert guide on photogrammetry for games).

I also looked into the Lightweight Render Pipeline (LWRP). It isn't the legacy renderer, but a newer, more optimized scriptable pipeline that targets a wider range of platforms, including mobile. Its demos looked quite pretty as well, and in some initial testing they ran totally fine on my laptop and played nicely with the Substance shaders. I chose it over the legacy renderer because the lighting is more sophisticated.

I went back and forth a lot here, and I'm still not totally convinced I've settled on HDRP; I actually have three WIP versions started. One thing I was realllyyy hoping for was to get the shaders as close to the Substance preview as possible. But in the preview (those sneaky bastards), the height maps actually displace the geometry, which as far as I know is not the case in realtime rendering elsewhere. HDRP does have a height map channel and claims to support vertex displacement, but just like normal maps, it doesn't seem to alter the silhouette.
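Here's my understanding of why the silhouette doesn't change, as a small Python sketch of the general idea (not HDRP's shader code). Vertex displacement can only push existing vertices along their normals, so the height map only shows up in the silhouette where the mesh has enough vertices to follow it; a normal map doesn't move vertices at all, it only changes the normal used for lighting. `height_at` is a made-up height map with a single bump in the middle of the tile.

```python
import math


def height_at(u: float, v: float) -> float:
    """Hypothetical height map: one bump in the middle of the tile, 0 at the edges."""
    return math.sin(math.pi * u) * math.sin(math.pi * v)


def displaced_plane(resolution: int, scale: float) -> list[tuple[float, float, float]]:
    """Flat plane in XZ with `resolution` x `resolution` vertices and +Y normals.

    Vertex displacement moves each vertex along its normal by height * scale.
    Because the normal is +Y everywhere, only the y coordinate changes.
    """
    if resolution < 2:
        raise ValueError("need at least 2 vertices per side")
    verts = []
    for i in range(resolution):
        for j in range(resolution):
            u = i / (resolution - 1)
            v = j / (resolution - 1)
            verts.append((u, height_at(u, v) * scale, v))
    return verts


def max_height(verts: list[tuple[float, float, float]]) -> float:
    return max(y for _, y, _ in verts)


# A single quad only has vertices at the corners, where the height map is ~0,
# so the bump never appears in the geometry (or the silhouette).
print(max_height(displaced_plane(2, scale=0.1)))
# A 33 x 33 grid has a vertex right at the center, so the full bump shows up.
print(max_height(displaced_plane(33, scale=0.1)))
```

On a low-poly greybox wall the corners are basically all there is, so my guess is that the Substance preview is displacing a much denser mesh than anything I'm feeding HDRP.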

Ultimately, the deciding factor was that I really wanted HDRP's lighting quality, and I wanted to follow the expert guide.

So now, I drag and drop my ten SBSAR materials into my Unity project.

Notice the pink? The Substance plugin brings your material in with the Standard shader. The Standard shader belongs to the legacy renderer and canNOT be rendered by HDRP. So I need to open up each one, change it to the HDRP "Lit" shader, set the quality to 2048, and double-check the maps. In most cases I had to plug them all back in for some reason.

Unfortunately, doing this breaks the thumbnail in the asset bin. :-(

This does not affect the material.

The concrete SBSAR is expanded here to show the HDRP material nested inside it ^^

For the frosted glass, first the Material Type needs to be set to Translucent.

Then, the base color needs an alpha value less than 1. Simply click the color picker and slide the A (alpha) slider down to taste.
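Why alpha below 1 reads as see-through: for a transparent surface, the surface color gets blended over whatever is already behind it. Here's a minimal Python sketch of the standard "over" blend, assuming straight (non-premultiplied) alpha; the colors are made-up example values, and this only shows the core blend step, not what HDRP does internally for lighting.

```python
def blend_over(src_rgb, src_alpha, dst_rgb):
    """'Over' alpha blending with straight (non-premultiplied) alpha:
    result = alpha * src + (1 - alpha) * dst, per color channel."""
    if not 0.0 <= src_alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return tuple(src_alpha * s + (1.0 - src_alpha) * d for s, d in zip(src_rgb, dst_rgb))


glass = (0.9, 0.95, 1.0)  # hypothetical pale glass tint (linear RGB, 0-1)
brick = (0.4, 0.2, 0.1)   # hypothetical color of whatever is behind the glass

print(blend_over(glass, 1.0, brick))  # alpha = 1: opaque, only the glass color
print(blend_over(glass, 0.3, brick))  # alpha = 0.3: mostly brick, tinted by the glass
```

Sliding A down is just moving that weight from the glass color toward the background.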

Another setting I really like in the SBSAR properties is the ability to switch between UV and Triplanar projection. Triplanar works great for static meshes and, with the click of a button, essentially removes the need for UV unwrapping.
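Triplanar projection is what makes the UVs unnecessary. Rather than reading mesh UVs, the shader projects the texture three times, along X, Y and Z, using the surface position, then blends the three samples based on which way the surface faces. Here's a minimal Python sketch of the common world-space version; it shows the general technique, not the Substance plugin's actual shader, and `checker` and the `sharpness` exponent are illustrative assumptions. Because the coordinates come from world position, moving the object would slide the texture across it, which is one reason triplanar suits static meshes.

```python
import math


def triplanar_weights(normal, sharpness=4.0):
    """Blend weights for the projections along X, Y and Z.

    A surface facing mostly along one axis gets mostly that projection;
    raising |n| to a power sharpens the transition. Weights sum to 1.
    Assumes a non-zero normal.
    """
    wx, wy, wz = (abs(c) ** sharpness for c in normal)
    total = wx + wy + wz
    return wx / total, wy / total, wz / total


def triplanar_sample(texture, position, normal, sharpness=4.0):
    """Sample `texture(u, v)` with world-space coordinates instead of mesh UVs."""
    x, y, z = position
    wx, wy, wz = triplanar_weights(normal, sharpness)
    return (
        wx * texture(y, z)    # projected along X (walls facing +/-X)
        + wy * texture(x, z)  # projected along Y (floors and ceilings)
        + wz * texture(x, y)  # projected along Z (walls facing +/-Z)
    )


def checker(u, v):
    """Hypothetical 1 m checkerboard texture returning 0.0 or 1.0."""
    return float((math.floor(u) + math.floor(v)) % 2)


# A floor (normal +Y) reads only the top-down projection, no UVs needed.
print(triplanar_sample(checker, (1.5, 0.0, 0.5), (0.0, 1.0, 0.0)))
```

The tradeoff is three texture samples per map instead of one, which is the price for skipping the unwrap.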

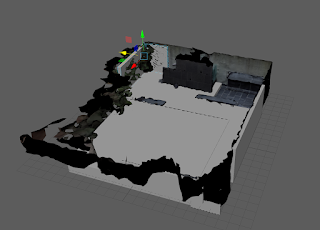

I mock up a quick greybox version of the space based on the layout geo (generated with the 360 camera).

Honestly, I could have done this step in Unity, but I work faster in Maya. I limit myself to about 30 minutes on this step, then use Maya's Game Exporter to export every object as a separate FBX (check the plug-in list if you don't see it under File).

Then I import everything back into Unity.

A couple of noob notes for myself re: moving an entire scene into Unity:

Maya to Unity:

1. Do not freeze transforms in Maya (Modify > Bake Pivot to get transforms back).

2. Export all objects separately using the Game Exporter.

3. Import into Assets. Select all and check the Convert Units checkbox.

4. Drag into the Hierarchy. Everything should be in its place.
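What the Convert Units checkbox is compensating for, as I understand it: Maya's default working unit is the centimeter, while Unity treats one unit as one meter, so the raw numbers differ by a factor of 100. Here's a tiny Python sketch of that arithmetic; the unit table and the wall position are example values, and it ignores any other conversions the importer may do.

```python
# Maya's default working unit is the centimeter; Unity treats 1 unit as 1 meter.
METERS_PER_UNIT = {"mm": 0.001, "cm": 0.01, "m": 1.0, "in": 0.0254, "ft": 0.3048}


def to_unity_units(value: float, source_unit: str = "cm") -> float:
    """Convert a length from the source file's unit to Unity units (meters)."""
    return value * METERS_PER_UNIT[source_unit]


def convert_position(pos, source_unit: str = "cm"):
    """Convert an (x, y, z) position with one uniform scale factor."""
    return tuple(to_unity_units(c, source_unit) for c in pos)


# Hypothetical wall placed 350 cm along X and 120 cm along Z in Maya.
print(convert_position((350.0, 0.0, 120.0)))
```

Because the same factor is applied to every object's position and size, the relative layout from Maya survives, which is why everything lands in its place after dragging into the Hierarchy.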

Again, first import the rough layout geo... (iterative design!)

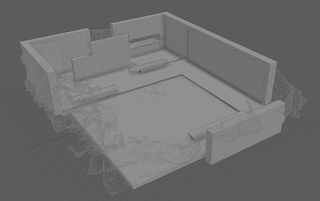

Then everything else. I'm glossing over a few steps here, but I did a quick pass at applying all the materials and tweaking them a bit.

And here's a screengrab without the layout geo.

A couple of obvious things stick out right away.

First, the tiling in the materials is very noticeable. This is partly because they're placeholders, and I'm using them on objects that are much larger than what I intend for the final assembly.

Still, though, I had a lot of problems with scale and had to manually tweak each material until it looked right. I realize now that I really needed to take real-world measurements and plan ahead for texel density. Unity says to capture a 1 meter square of any material you scan; I just grabbed whatever I could get.
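The arithmetic I should have planned for, as a quick Python sketch. Texel density is just texture pixels divided by the real-world distance they cover, and the tiling a material needs is the surface size divided by the size of the scanned patch. The 6 m wall and the 0.5 m scan are hypothetical; the point is that without knowing how big each scan really was, there's nothing to plug in, so every material ends up being tweaked by eye.

```python
def texel_density(texture_px: int, covered_m: float) -> float:
    """Texels per meter when a texture `texture_px` wide spans `covered_m` meters."""
    return texture_px / covered_m


def tiling_for(surface_m: float, scan_m: float) -> float:
    """Number of repeats needed across a surface `surface_m` long so that a
    scan covering `scan_m` meters shows up at real-world scale."""
    return surface_m / scan_m


# A 2K export of a 1 m x 1 m scan...
print(texel_density(2048, 1.0))  # texels per meter
# ...needs to repeat 6 times across a hypothetical 6 m wall to stay life-size.
print(tiling_for(6.0, 1.0))
# If the scan only covered 0.5 m, the same wall needs twice as many repeats.
print(tiling_for(6.0, 0.5))
```

It also shows the tradeoff behind the obvious tiling: keeping a small scan at real-world scale on a big placeholder surface means a lot of visible repeats.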

Second, my placeholder geo kinda sucks, haha. I think I would have been better off with plain cubes? It's actually related to the scale issue: I added bevels without thinking about how noticeable they would look.

On the positive side, it's starting to come together! The rendering and post-processing are really, really nice. It doesn't have that typical "Unity student project" look that I've noticed is really common in Unity games (even Overcooked has symptoms of this... something about missing antialiasing and color correction, dinky-looking shadows, and shaders that fall off to black? I can't totally put my finger on it, but there's definitely a look to the legacy renderer.)

Walking around the space is starting to feel like being in the real place. Getting up close to the bricks is actually kind of exciting.

Next post I'm going to implement the scanned assets... stay tuned!